Parsing and Parse Trees

(Content adapted from Critchlow & Eck)

Suppose that is a grammar for the language . That is, . The grammar can be used to generate strings in the language . In practice, though, we often start with a string which might or might not be in , and the problem is to determine whether the string is in the language and, if so, how it can be generated by . The goal is to find a derivation of the string, using the production rules of the grammar, or to show that no such derivation exists. This is known as parsing the string. When the string is a computer program or a sentence in a natural language, parsing the string is an essential step in determining its meaning.

As an example that we will use throughout this section, consider the language that consists of arithmetic expressions containing parentheses, the binary operators and , and the variables , , and . Strings in this language include , , and . Here is a context-free grammar that generates this language:

Call the grammar described by these production rules . The grammar says that , , and are expressions, and that you can make new expressions by adding two expressions, by multiplying two expressions, and by enclosing an expression in parentheses. (Later, we'll look at other grammars for the same language—ones that turn out to have certain advantages over .)

Consider the string . To show that this string is in the language , we can exhibit a derivation of the string from the start symbol . For example:

This derivation shows that the string is in fact in . Now, this string has many other derivations. At each step in the derivation, there can be a lot of freedom about which rule in the grammar to apply next. Some of this freedom is clearly not very meaningful. When faced with the string in the above example, the order in which we replace the 's with the variables , , and doesn't much matter. To cut out some of this meaningless freedom, we could agree that in each step of a derivation, the non-terminal symbol that is replaced is the leftmost non-terminal symbol in the string. A derivation in which this is true is called a left derivation. The following left derivation of the string uses the same production rules as the previous derivation, but it applies them in a different order:

It shouldn't be too hard to convince yourself that any string that has a derivation has a left derivation (which can be obtained by changing the order in which production rules are applied).

We have seen that the same string might have several different derivations. We might ask whether it can have several different left derivations. The answer is that it depends on the grammar. A context-free grammar is said to be ambiguous if there is a string such that has more than one left derivation according to the grammar .

Our example grammar is ambiguous. In fact, in addition to the left derivation given above, the string has the alternative left derivation

In this left derivation of the string , the first production rule that is applied is . The first on the right-hand side eventually yields "" while the second yields "". In the previous left derivation, the first production rule that was applied was , with the first on the right yielding "" and the second yielding "". If we think in terms of arithmetic expressions, the two left derivations lead to two different interpretations of the expression . In one interpretation, the is a unit that is multiplied by . In the second interpretation, the is a unit that is added to . The second interpretation is the one that is correct according to the usual rules of arithmetic. However, the grammar allows either interpretation. The ambiguity of the grammar allows the string to be parsed in two essentially different ways, and only one of the parsings is consistent with the meaning of the string. Of course, the grammar for English is also ambiguous. In a famous example, it's impossible to tell whether a "pretty girls' camp" is meant to describe a pretty camp for girls or a camp for pretty girls.1

When dealing with artificial languages such as programming languages, it's better to avoid ambiguity. The grammar is perfectly correct in that it generates the correct set of strings, but in a practical situation where we are interested in the meaning of the strings, is not the right grammar for the job. There are other grammars that generate the same language as . Some of them are unambiguous grammars that better reflect the meaning of the strings in the language. For example, the language is also generated by the BNF grammar

This grammar can be translated into a standard context-free grammar, which I will call :

The language generated by consists of all legal arithmetic expressions made up of parentheses, the operators and , and the variables , , and . That is, . However, is an unambiguous grammar. Consider, for example, the string . Using the grammar , the only left derivation for this string is:

There is no choice about the first step in this derivation, since the only production rule with on the left-hand side is . Similarly, the second step is forced by the fact that there is only one rule for rewriting a . In the third step, we must replace an . There are four ways to rewrite , but only one way to produce the that begins the string , so we apply the rule . Now, we have to decide what to do with the in . There are two rules for rewriting , and . However, the first of these rules introduces a terminal, , which does not match the string we are trying to parse. So, the only choice is to apply the production rule . In the next step of the derivation, we must apply the rule in order to account for the in the string . Similarly, each of the remaining steps in the left derivation is forced.

The fact that is an unambiguous grammar means that at each step in a left derivation for a string , there is only one production rule that can be applied which will lead ultimately to a correct derivation of . However, actually satisfies a much stronger property: at each step in the left derivation of , we can tell which production rule has to be applied by looking ahead at the next symbol in . We say that is an LL(1) grammar. (This notation means that we can read a string from Left to right and construct a Left derivation of the string by looking ahead at most 1 character in the string.) Given an LL(1) grammar for a language, it is fairly straightforward to write a computer program that can parse strings in that language. If the language is a programming language, then parsing is one of the essential steps in translating a computer program into machine language. LL(1) grammars and parsing programs that use them are often studied in courses in programming languages and the theory of compilers. For more details on how to turn an LL(1) grammar into code, see the section on recursive descent and parser combinators.

Not every unambiguous context-free grammar is an LL(1) grammar. Consider, for example, the following grammar, which I will call :

This grammar generates the same language as and , and it is unambiguous. However, it is not possible to construct a left derivation for a string according to the grammar by looking ahead one character in the string at each step. The first step in any left derivation must be either or . But how can we decide which of these is the correct first step? Consider the strings and , which are both in the language . For the string , the first step in a left derivation must be , while the first step in a left derivation of must be . However, the first seven characters of the strings are identical, so clearly looking even seven characters ahead is not enough to tell us which production rule to apply. In fact, similar examples show that looking ahead any given finite number of characters is not enough.

However, there is an alternative parsing procedure that will work for . This alternative method of parsing a string produces a right derivation of the string, that is, a derivation in which at each step, the non-terminal symbol that is replaced is the rightmost non-terminal symbol in the string. Here, for example, is a right derivation of the string according to the grammar :

The parsing method that produces this right derivation produces it from "bottom to top." That is, it begins with the string and works backward to the start symbol , generating the steps of the right derivation in reverse order. The method works because is what is called an LR(1) grammar. That is, roughly, it is possible to read a string from Left to right and produce a Right derivation of the string, by looking ahead at most 1 symbol at each step. Although LL(1) grammars are easier for people to work with, LR(1) grammars turn out to be very suitable for machine processing, and they are used as the basis for the parsing process in many compilers.

LR(1) parsing uses a shift/reduce algorithm. Imagine a cursor or current position that moves through the string that is being parsed. We can visualize the cursor as a vertical bar, so for the string , we start with the configuration . A shift operation simply moves the cursor one symbol to the right. For example, a shift operation would convert to , and a second shift operation would convert that to . In a reduce operation, one or more symbols immediately to the left of the cursor are recognized as the right-hand side of one of the production rules in the grammar. These symbols are removed and replaced by the left-hand side of the production rule. For example, in the configuration , the to the left of the cursor is the right-hand side of the production rule , so we can apply a reduce operation and replace the with , giving . This first reduce operation corresponds to the last step in the right derivation of the string, . Now the can be recognized as the right-hand side of the production rule , so we can replace the with , giving . This corresponds to the next-to-last step in the right derivation, .

At this point, we have the configuration . The could be the right-hand side of the production rule . However, it could also conceivably come from the rule . How do we know whether to reduce the to at this point or to wait for a to come along so that we can reduce ? We can decide by looking ahead at the next character after the cursor. Since this character is a rather than a , we should choose the reduce operation that replaces with , giving . What makes an LR(1) grammar is the fact that we can always decide what operation to apply by looking ahead at most one symbol past the cursor.

After a few more shift and reduce operations, the configuration becomes , which we can reduce to by applying the production rules and . Now, faced with , we must once again decide between a shift operation and a reduce operation that applies the rule . In this case, since the next character is a rather than a , we apply the shift operation, giving . From there we get, in succession, , , , and finally . At this point, we have reduced the entire string to the start symbol of the grammar. The very last step, the reduction of to corresponds to the first step of the right derivation, .

In summary, LR(1) parsing transforms a string into the start symbol of the grammar by a sequence of shift and reduce operations. Each reduce operation corresponds to a step in a right derivation of the string, and these steps are generated in reverse order. Because the steps in the derivation are generated from "bottom to top," LR(1) parsing is a type of bottom-up parsing. LL(1) parsing, on the other hand, generates the steps in a left derivation from "top to bottom" and so is a type of top-down parsing.

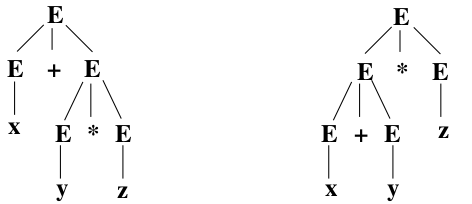

Although the language generated by a context-free grammar is defined in terms of derivations, there is another way of presenting the generation of a string that is often more useful. A parse tree displays the generation of a string from the start symbol of a grammar as a two dimensional diagram. Here are two parse trees that show two derivations of the string x+y*z according to the grammar , which was given at the beginning of this section:

A parse tree is made up of terminal and non-terminal symbols, connected by lines. The start symbol is at the top, or "root," of the tree. Terminal symbols are at the lowest level, or "leaves," of the tree. (For some reason, computer scientists traditionally draw trees with leaves at the bottom and root at the top.) A production rule is represented in a parse tree by the symbol lying above all the symbols in , with a line joining to each of the symbols in . For example, in the left parse tree above, the root, , is connected to the symbols , , and , and this corresponds to an application of the production rule .

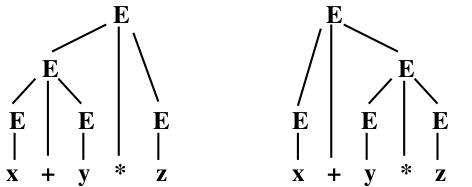

It is customary to draw a parse tree with the string of non-terminals in a row across the bottom, and with the rest of the tree built on top of that base. Thus, the two parse trees shown above might be drawn as:

Given any derivation of a string, it is possible to construct a parse tree that shows each of the steps in that derivation. However, two different derivations can give rise to the same parse tree, since the parse tree does not show the order in which production rules are applied. For example, the parse tree on the left, above, does not show whether the production rule is applied before or after the production rule . However, if we restrict our attention to left derivations, then we find that each parse tree corresponds to a unique left derivation and vice versa. I will state this fact as a theorem, without proof. A similar result holds for right derivations.

Theorem: Let be a context-free grammar. There is a one-to-one correspondence between parse trees and left derivations based on the grammar .

Based on this theorem, we can say that a context-free grammar is ambiguous if and only if there is a string which has two parse trees.

Exercises

Show that each of the following grammars is ambiguous by finding a string that has two left derivations according to the grammar:

a)

b)

Consider the string . Find a left derivation of this string according to each of the grammars , , and , as given in this section.

Draw a parse tree for the string according to each of the grammars , , and , as given in this section.

Draw three different parse trees for the string based on the grammar given in part a) of exercise 1.

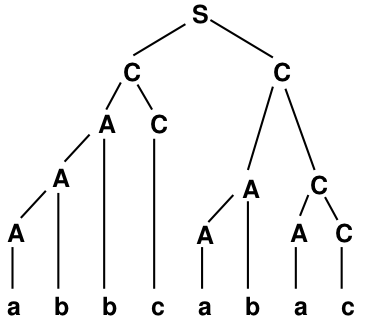

Suppose that the string has the parse tree shown below, according to some grammar .

a) List five production rules that must be rules in the grammar , given that this is a valid parse tree.

b) Give a left derivation for the string according to the grammar .

c) Give a right derivation for the string according to the grammar .

Show the full sequence of shift and reduce operations that are used in the LR(1) parsing of the string according to the grammar , and give the corresponding right derivation of the string.

This section showed how to use LL(1) and LR(1) parsing to find a derivation of a string in the language generated by some grammar . How is it possible to use LL(1) or LR(1) parsing to determine for an arbitrary string whether ? Give an example.

- A related example with many more interpretations is "pretty little girls' school."↩