General Grammars and Turing Machines

(Content adapted from Critchlow & Eck)

General Grammars

At the beginning of this chapter the general idea of a grammar as a set of rewriting or production rules was introduced. For most of the chapter, however, we have restricted our attention to context-free grammars, in which production rules must be of the form where is a non-terminal symbol. In this section, we will consider general grammars, that is, grammars in which there is no such restriction on the form of production rules. For a general grammar, a production rule has the form , where is string that can contain both terminal and non-terminal symbols. For convenience, we will assume that contains at least one non-terminal symbol, although even this restriction could be lifted without changing the class of languages that can be generated by grammars. Note that a context-free grammar is, in fact, an example of a general grammar, since production rules in a general grammar are allowed to be of the form . They just don't have to be of this form. I will use the unmodified term grammar to refer to general grammars.1 The definition of grammar is identical to the definition of context-free grammar, except for the form of the production rules:

A grammar is a 4-tuple , where:

- is a finite set of symbols. The elements of are the non-terminal symbols of the grammar.

- is a finite set of symbols such that . The elements of are the terminal symbols of the grammar.

- is a set of production rules. Each rule is of the form where and are strings in and contains at least one symbol from .

- . is the start symbol of the grammar.

Suppose is a grammar. Just as in the context-free case, the language generated by is denoted by and is defined as . That is, a string is in if and only if is a string of terminal symbols and there is a derivation that produces from the start symbol, , in one or more steps.

The natural question is whether there are languages that can be generated by general grammars but that cannot be generated by context-free languages. A simple example of a language that is not context-free is . This language can be generated by a general grammar that first generates a string of the form , where there are groups of for some . Then we use general grammar rules that allow 's, 's, and 's to switch places, until the string looks like . Finally, another set of rules essentially traverse the string from left to right, changing the non-terminals , , and into the corresponding terminals , , and . Here is a grammar that does this:

Here, the first two rules produce one of the strings , , , , and so on. The next three rules allow 's to move to the left and 's to move to the right, producing a string of the form , for some . The rule allows the to move through the 's from left to right, converting 's to 's as it goes. After converting the 's, the can be transformed into a . The will then move through the 's, converting them to 's. Then, the is transformed into a , which is responsible for converting 's to 's. Finally, an application of the rule removes the , leaving the string .

Note that if the rule is applied before all the 's have been converted to 's, then there is no way for the remaining 's to be converted to 's or otherwise removed from the string. This means that the derivation has entered a dead end, which can never produce a string that consists of terminal symbols only. The only derivations that can produce strings in the language generated by the grammar are derivations in which the moves past all the 's, converting them all to 's. At this point in the derivation, the string is of the form where is a string consisting entirely of 's and 's. Now the rule can be applied, producing the string . Then, if a string of terminal symbols is ever to be produced, the must move past all the 's, producing the string . You can see that the use of three separate non-terminals, , , and , is essential for forcing the symbols in into the correct order.

Turing Machines

We saw hints in the previous section that "computation" is a more general concept than we might have thought. General grammars, which at first encounter don't seem to have much to do with algorithms or computing, turn out to be able to do things that are similar to the tasks carried out by computer programs. In this section, we will see that general grammars are precisely equivalent to computer programs in terms of their computational power, and that both are equivalent to a particularly simple model of computation known as a Turing machine. We shall also see that there are limits to what can be done by computing.

Historically, the theoretical study of computing began before computers existed. One of the early models of computation was developed in the 1930s by the British mathematician, Alan Turing, who was interested in studying the theoretical abilities and limitations of computation. His model for computation is a very simple abstract computing machine which has come to be known as a Turing machine. While Turing machines are not applicable in the same way that regular expressions, finite-state automata, and grammars are applicable, their use as a fundamental model for computation means that every computer scientist should be familiar with them, at least in a general way.

A Turing machine is really not much more complicated than a finite-state automaton or a pushdown automaton.2 Like a FSA, a Turing machine has a finite number of possible states, and it changes from state to state as it computes. However, a Turing machine also has an infinitely long tape that it can use for input and output. The tape extends to infinity in both directions. The tape is divided into cells, which are in one-to-one correspondence with the integers, . Each cell can either be blank or it can hold a symbol from a specified alphabet. The Turing machine can move back and forth along this tape, reading and writing symbols and changing state. It can read only one cell at a time, and possibly write a new value in that cell. After doing this, it can change state and it can move by one cell either to the left or to the right. This is how the Turing machine computes. To use a Turing machine, you would write some input on its tape, start the machine, and let it compute until it halts. Whatever is written on the tape at that time is the output of the computation.

Although the tape is infinite, only a finite number of cells can be non-blank at any given time. If you don't like the idea of an infinite tape, you can think of a finite tape that can be extended to an arbitrarily large size as the Turing machine computes: If the Turing machine gets to either end of the tape, it will pause and wait politely until you add a new section of tape. In other words, it's not important that the Turing machine have an infinite amount of memory, only that it can use as much memory as it needs for a given computation, up to any arbitrarily large size. In this way, a Turing machine is like a computer that can ask you to buy it a new disk drive whenever it needs more storage space to continue a computation.3

A given Turing machine has a fixed, finite set of states. One of these states is designated as the start state. This is the state in which the Turing machine begins a computation. Another special state is the halt state. The Turing machine's computation ends when it enters its halt state. It is possible that a computation might never end because the machine never enters the halt state. This is analogous to an infinite loop in a computer program.

At each step in its computation, the Turing machine reads the contents of the tape cell where it is located. Depending on its state and the symbol that it reads, the machine writes a symbol (possibly the same symbol) to the cell, moves one cell either to the left or to the right, and (possibly) changes its state. The output symbol, direction of motion, and new state are determined by the current state and the input symbol. Note that either the input symbol, the output symbol, or both, can be blank. A Turing machine has a fixed set of rules that tell it how to compute. Each rule specifies the output symbol, direction of motion, and new state for some combination of current state and input symbol. The machine has a rule for every possible combination of current state and input symbol, except that there are no rules for what happens if the current state is the halt state. Of course, once the machine enters the halt state, its computation is complete and the machine simply stops.

I will use the character # to represent a blank in a way that makes it visible. I will always use to represent the halt state. I will indicate the directions, left and right, with and , so that is the set of possible directions of motion. With these conventions, we can give the formal definition of a Turing machine as follows:

A Turing machine is a 4-tuple , where:

- is a finite set of states, including the halt state, .

- is an alphabet which includes the blank symbol, #.

- is the start state.

- is the transition function. The fact that means that when the Turing machine is in state and reads the symbol , it writes the symbol , moves one cell in the direction , and enters state .

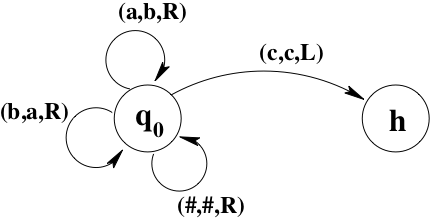

Even though this is the formal definition, it's easier to work with a transition diagram representation of Turing machines. The transition diagram for a Turing machine is similar to the transition diagram for a DFA. However, there are no "accepting" states (only a halt state). Furthermore, there must be a way to specify the output symbol and the direction of motion for each step of the computation. We do this by labeling arrows with notations of the form and , where and are symbols in the Turing machine's alphabet. For example,

indicates that when the machine is in state and reads an , it writes a , moves left, and enters state .

Here, for example, is a transition diagram for a simple Turing machine that moves to the right, changing 's to 's and vice versa, until it finds a . It leaves blanks (#'s) unchanged. When and if the machine encounters a , it moves to the left and halts:

To simplify the diagrams, I will leave out any transitions that are not relevant to the computation that I want the machine to perform. You can assume that the action for any omitted transition is to write the same symbol that was read, move right, and halt.

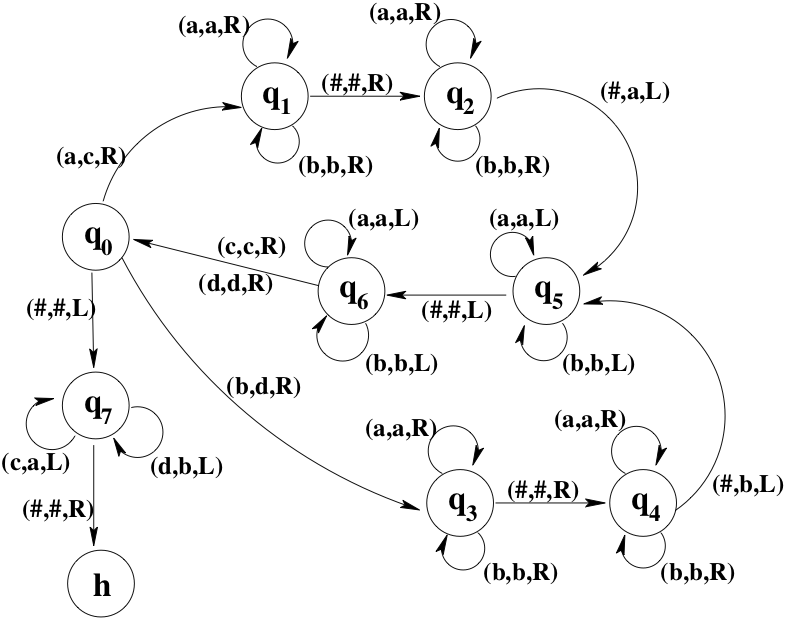

For another example, shown below is a transition diagram for a Turing machine that makes a copy of a string of 's and 's. To use this machine, you would write a string of 's and 's on its tape, place the machine on the first character of the string, and start the machine in its start state, . When the machine halts, there will be two copies of the string on the tape, separated by a blank. The machine will be positioned on the first character of the leftmost copy of the string. Note that this machine uses 's and 's in addition to 's and 's. While it is copying the input string, it temporarily changes the 's and 's that it has copied to 's and 's, respectively. In this way it can keep track of which characters it has already copied. After the string has been copied, the machine changes the 's and 's back to 's and 's before halting.

In this machine, state checks whether the next character is an , a , or a # (indicating the end of the string). States and add an to the end of the new string, and states and do the same thing with a . States and return the machine to the next character in the input string. When the end of the input string is reached, state will move the machine back to the start of the input string, changing 's and 's back to 's and 's as it goes. Finally, when the machine hits the # that precedes the input string, it moves to the right and halts. This leave it back at the first character of the input string. It would be a good idea to work through the execution of this machine for a few sample input strings. You should also check that it works even for an input string of length zero.

Our primary interest in Turing machines is as language processors. Suppose that is a string over an alphabet . We will assume that does not contain the blank symbol. We can use as input to a Turing machine provided that . To use as input for , we will write on 's tape and assume that the remainder of the tape is blank. We place the machine on the cell containing the first character of the string, except that if then we simply place the machine on a completely blank tape. Then we start the machine in its initial state, , and see what computation it performs. We refer to this setup as "running with input ."

When is run with input , it is possible that it will just keep running forever without halting. In that case, it doesn't make sense to ask about the output of the computation. Suppose however that does halt on input . Suppose, furthermore, that when halts, its tape is blank except for a string of non-blank symbols, and that the machine is located on the first character of . In this case, we will say that " halts with output ." In addition, if halts with an entirely blank tape, we say that " halts with output ." Note that when we run with input , one of three things can happen: (1) might halt with some string as output; (1) might fail to halt; or (3) might halt in some configuration that doesn't count as outputting any string.

The fact that a Turing machine can produce an output value allows us for the first time to deal with computation of functions. A function takes an input value in the set and produces an output value in the set . If the sets are sets of strings, we can now ask whether the values of the function can be computed by a Turing machine. That is, is there a Turing machine such that, given any string in the domain of as input, will compute as its output the string . If this is that case, then we say that is a Turing-computable function.

Suppose that and are alphabets that do not contain # and that is a function from to . We say that is Turing-computable if there is a Turing machine such that and and for each string , when is run with input , it halts with output . In this case, we say that computes the function .

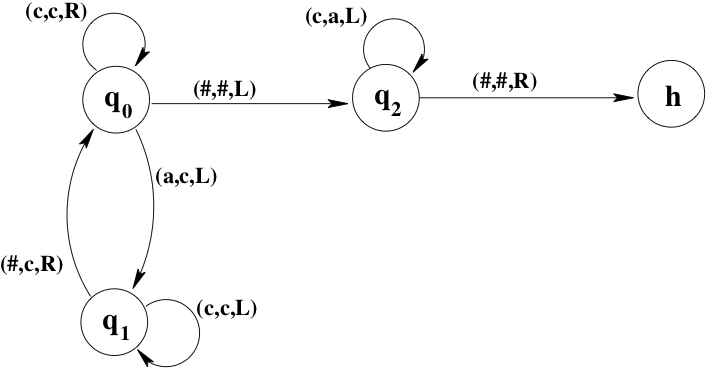

For example, let and define by , for . Then is Turing-computable since it is computed by this Turing machine:

We can also use Turing machines to define "computable languages." There are actually two different notions of Turing-computability for languages. One is based on the idea of Turing-computability for functions. Suppose that is an alphabet and that . The characteristic function of is the function defined by the fact that if and if . Note that given the function , can be obtained as the set . Given a language , we can ask whether the corresponding function is Turing-computable. If so, then we can use a Turing machine to decide whether or not a given string is in . Just run the machine with input . It will halt with output . (That is, it will halt and when it does so, the tape will be blank except for a 0 or a 1, and the machine will be positioned on the 0 or 1.) If the machine halts with output 1, then . If the machine halts with output 0, then .

Let be an alphabet that does not contain # and let be a language over . We say that is Turing-decidable if there is a Turing machine such that , , and for each , when is run with input , it halts with output . (That is, it halts with output 0 or 1, and the output is 0 if and is 1 if .) In this case, we say that decides the language .

The second notion of computability for languages is based on the interesting fact that it is possible for a Turing machine to run forever, without ever halting. Whenever we run a Turing machine with input , we can ask the question, will ever halt or will it run forever? If halts on input , we will say that "accepts" . We can then look at all the strings over a given alphabet that are accepted by a given Turing machine. This leads to the notion of Turing-acceptable languages.

Let be an alphabet that does not contain #, and let be a language over . We say that is Turing-acceptable if there is a Turing machine such that , and for each , halts on input if and only if . In this case, we say that accepts the language .

It should be clear that any Turing-decidable language is Turing-acceptable. In fact, if is a language over an alphabet , and if is a Turing machine that decides , then it is easy to modify to produce a Turing machine that accepts . At the point where enters the halt state with output 0, the new machine should enter a new state in which it simply moves to the right forever, without ever halting. Given an input , the modified machine will halt if and only if halts with output 1, that is, if and only if .

Exercises

Let . Draw a transition diagram for a Turing machine that computes the function where , for . Draw a transition diagram for a Turing machine that computes the function where , for .

Let . Draw a transition diagram for a Turing machine that computes the function where .

Suppose that , , and are alphabets and that and are Turing-computable functions. Show that is Turing-computable.

We have defined computability for functions , where and are alphabets. How could Turing machines be used to define computable functions from to ? (Hint: Consider the alphabet .)

Let be an alphabet and let be a language over . Show that is Turing-decidable if and only if its complement, , is Turing-decidable.

Draw a transition diagram for a Turing machine which decides the language . (Hint: Change the 's and 's to $'s in pairs.) Explain in general terms how to make a Turing machine that decides the language .

Draw a transition diagram for a Turing machine which decides the language and is a multiple of . (Hint: Erase 's at a time.)

Based on your answer to the previous problem and the copying machine presented in this section, describe in general terms how you would build a Turing machine to decide the language is a prime number.

Let be the function such that for each , is the representation of as a binary number. Draw a transition diagram for a Turing machine that computes .

- There is another special type of grammar that is intermediate between context-free grammars and general grammars. In a so-called context-sensitive grammar, every production rule is of the form where . We will not cover context-sensitive grammars in this text.↩

- In fact, Turing machines can be shown to be equivalent in their computational power to pushdown automata with two independent stacks.↩

- The tape of a Turing machine can be used to store arbitrarily large amounts of information in a straightforward way. Although we can imagine using an arbitrary amount of memory with a computer, it's not so easy. Computers aren't set up to keep track of unlimited amounts of data. If you think about how it might be done, you probably won't come with anything better than an infinite tape. (The problem is that computers use integer-valued addresses to keep track of data locations. If a limit is put on the number of bits in an address, then only a fixed, finite amount of data can be addressed. If no limit is put on the number of bits in an address, then we are right back to the problem of storing an arbitrarily large piece of data—just to represent an address!)↩